On each server, edit the sudoers file so that sudo commands do not require a password (k3sup needs this to work)

    %sudo ALL=(ALL:ALL) NOPASSWD: ALL
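As a minimal sketch, the rule can also go into a drop-in file instead of being added to /etc/sudoers by hand. This assumes the stock Ubuntu setup where /etc/sudoers includes /etc/sudoers.d; the helper name `write_nopasswd_rule` and the file name in the usage note are hypothetical.

```shell
# Hypothetical helper: write the NOPASSWD rule to the file given as $1.
write_nopasswd_rule() {
  target="$1"
  # Same rule as shown above.
  printf '%s\n' '%sudo ALL=(ALL:ALL) NOPASSWD: ALL' > "$target"
  # 0440 is the conventional mode for sudoers files.
  chmod 0440 "$target"
}
```

Run as root, for example `write_nopasswd_rule /etc/sudoers.d/k3sup-nopasswd`, and then check the syntax with `visudo -cf /etc/sudoers.d/k3sup-nopasswd` before logging out.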

Install First Master server

    k3sup install \
      --host=1of3 \
      --user=moorest \
      --k3s-version=v1.21.5+k3s1 \
      --local-path=borg.yaml \
      --context borg \
      --cluster \
      --tls-san 10.68.0.70 \
      --k3s-extra-args="--disable servicelb --node-taint node-role.kubernetes.io/master=true:NoSchedule"

Setup aliases and variables

    alias k='kubectl'
    alias ns='kubectl config set-context --current --namespace '
    export KUBECONFIG=/Users/moorest/borg.yaml

Log into master node 1, install the kube-vip RBAC manifest, pull the kube-vip image, set the variables, and generate the daemonset and cloud provider manifests

    ssh 1of3
    curl -s https://kube-vip.io/manifests/rbac.yaml > /var/lib/rancher/k3s/server/manifests/kube-vip-rbac.yaml
    crictl pull docker.io/plndr/kube-vip:v0.4.1
    export VIP=192.168.99.70
    export INTERFACE=eno1
    alias kube-vip="ctr run --rm --net-host docker.io/plndr/kube-vip:v0.4.1 vip /kube-vip"
    kube-vip manifest daemonset \
      --arp \
      --interface $INTERFACE \
      --address $VIP \
      --controlplane \
      --leaderElection \
      --taint \
      --services \
      --inCluster | tee /var/lib/rancher/k3s/server/manifests/kube-vip.yaml
    curl -sfL https://raw.githubusercontent.com/kube-vip/kube-vip-cloud-provider/main/manifest/kube-vip-cloud-controller.yaml > /var/lib/rancher/k3s/server/manifests/kube-vip-cloud-controller.yaml
    ping 192.168.99.70

Edit borg.yaml and replace the host in the server entry with the VIP, 192.168.99.70
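The same edit can be scripted. This is a rough sketch that assumes the kubeconfig contains a line of the form `server: https://<host>:<port>`; the helper name `set_kubeconfig_server` is made up for illustration.

```shell
# Hypothetical helper: point the "server:" entry of a kubeconfig at a new host.
# $1 = kubeconfig path, $2 = new host or IP (here, the kube-vip VIP).
set_kubeconfig_server() {
  kubeconfig="$1"
  host="$2"
  # Keep the scheme and port, swap only the host part.
  # -i.bak works with both GNU and BSD (macOS) sed and leaves a backup copy.
  sed -E -i.bak "s#(server: https://)[^:/]+(:[0-9]+)#\1${host}\2#" "$kubeconfig"
}
```

For this cluster the call would be `set_kubeconfig_server /Users/moorest/borg.yaml 192.168.99.70`. The original file is kept next to it with a `.bak` suffix.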

Join the other master nodes to the cluster

    k3sup join \
      --host=2of3 \
      --server-user=moorest \
      --k3s-version=v1.21.5+k3s1 \
      --server-host=192.168.99.70 \
      --server \
      --user=moorest \
      --k3s-extra-args="--disable servicelb --node-taint node-role.kubernetes.io/master=true:NoSchedule"

Repeat for 3of3, then join worker nodes

    k3sup join \
      --host=1of5 \
      --server-user=moorest \
      --server-host=192.168.99.70 \
      --k3s-version=v1.21.5+k3s1 \
      --user=moorest

Repeat for 2of5 through 5of5
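The repeated joins can be generated with a small loop. A sketch, with the flags copied from the worker join command above; the function name `worker_join_cmds` is hypothetical, and it only prints the commands so they can be reviewed first.

```shell
# Hypothetical helper: print one k3sup join command per host name given as an argument.
worker_join_cmds() {
  for host in "$@"; do
    echo "k3sup join --host=${host} --server-user=moorest --server-host=192.168.99.70 --k3s-version=v1.21.5+k3s1 --user=moorest"
  done
}
```

`worker_join_cmds 2of5 3of5 4of5 5of5` prints the four commands; piping that output to `sh` runs them one after another.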

Create kube-vip-config.yaml in /var/lib/rancher/k3s/server/manifests

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kubevip
      namespace: kube-system
    data:
      range-global: 192.168.99.90-192.168.99.99
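The range-global value sets how many addresses the kube-vip cloud provider has available for LoadBalancer services. As a quick check of the arithmetic, this sketch counts the addresses in an inclusive IPv4 range; the helper names `ip_to_int` and `range_size` are made up for illustration.

```shell
# Convert a dotted IPv4 address to an integer.
# Uses the global variables a, b, c and d as scratch space.
ip_to_int() {
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( $a * 16777216 + $b * 65536 + $c * 256 + $d ))
}

# Count the addresses in an inclusive "first-last" range.
range_size() {
  first="${1%-*}"   # text before the dash
  last="${1#*-}"    # text after the dash
  echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}

range_size 192.168.99.90-192.168.99.99   # prints 10
```

So the range above leaves room for ten LoadBalancer services before the pool is exhausted.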

Install Helm on each master server

    curl https://baltocdn.com/helm/signing.asc | apt-key add -
    apt-get install apt-transport-https --yes
    echo "deb https://baltocdn.com/helm/stable/debian/ all main" > /etc/apt/sources.list.d/helm-stable-debian.list
    apt-get update
    apt-get install helm

Configure remaining space on Worker nodes for Longhorn

vgdisplay -v ubuntu-vg

Use the free extents number as the length value in the next command:

    lvcreate -n longhorn-lv -l 69403 ubuntu-vg
    mkfs -t ext4 /dev/mapper/ubuntu--vg-longhorn--lv
    mkdir /var/lib/longhorn
    echo "/dev/mapper/ubuntu--vg-longhorn--lv /var/lib/longhorn ext4 defaults 0 1" >> /etc/fstab
    mount /var/lib/longhorn
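The free extent count differs per node, so it can help to read it from the vgdisplay output rather than copying it by hand. A small sketch, assuming the usual "Free  PE / Size" line in that output; the helper name `free_extents` is hypothetical.

```shell
# Hypothetical helper: read vgdisplay output on stdin and print the free extent count.
# On the "Free  PE / Size" line the count is the fifth whitespace-separated field.
free_extents() {
  awk '/Free +PE/ { print $5 }'
}
```

It could be used as `lvcreate -n longhorn-lv -l "$(vgdisplay ubuntu-vg | free_extents)" ubuntu-vg`. LVM also accepts `-l 100%FREE`, which allocates all remaining extents without needing the number at all.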

Install NFS server

apt-get install nfs-kernel-server

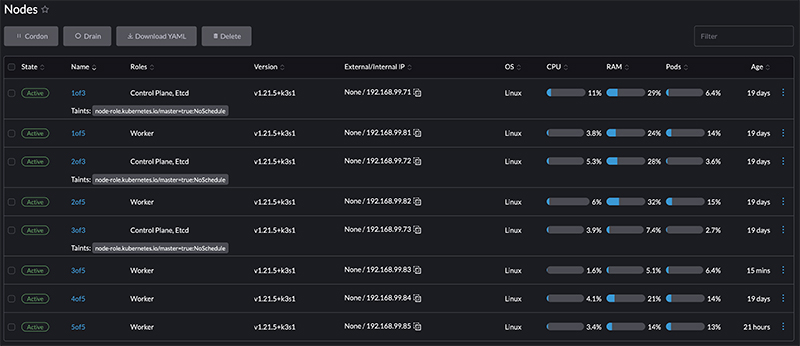

Here is a screenshot of my cluster from Rancher:

If a worker node needs to be replaced, either completely or just its hard disk, all that is needed is:

- Drain and then delete the node from the cluster

- Replace the failed hardware

- Install a new OS

- Add the password-less sudo entry

- Configure the remaining disk space for Longhorn

- Install the NFS client

- Run the k3sup command to add the node to the cluster
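The first step of the list above might look like the sketch below. The drain flags are an assumption about what this cluster needs (DaemonSet pods and emptyDir volumes would otherwise block the drain); the helper names `run` and `remove_worker` are hypothetical. By default it only prints the commands.

```shell
# Print the command when DRY_RUN is 1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: drain a worker and remove it from the cluster.
remove_worker() {
  node="$1"
  # Evict the pods; DaemonSet pods are skipped, emptyDir data is discarded.
  run kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data
  # Remove the node object so the rebuilt machine can join under the same name.
  run kubectl delete node "$node"
}
```

`remove_worker 3of5` shows what would be run; `DRY_RUN=0 remove_worker 3of5` actually runs it against the cluster selected by KUBECONFIG.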

Here is a current picture of my rack:

The loose cables are for when I remove the Dell R210 II and replace it with a second CheckPoint T-180 for my pfSense HA cluster.

The extra Lenovo Tiny on the far right is an M93p that runs Docker containers for Minecraft and Rancher.

The various Lenovo Tiny PCs and their power supplies are held in place by strips of velcro: the hook part runs in strips across the shelf, and the loop part runs along the bottom edge of each PC and power supply.